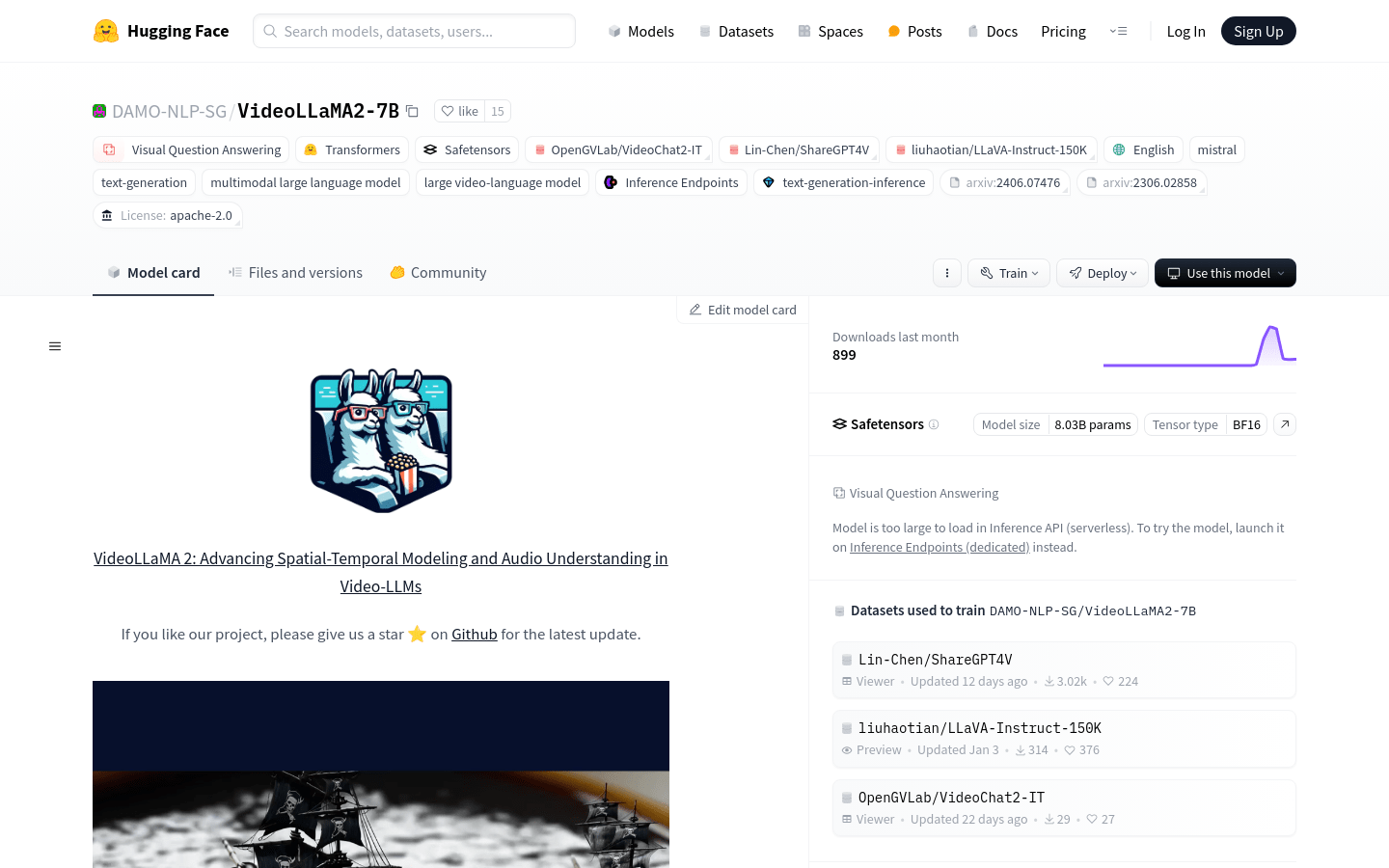

VideoLLaMA2-7B

A large video-language model for visual question answering and video caption generation.

Tags: AI video tools, AI video caption generator

Introduction:

VideoLLaMA2-7B is a multimodal large language model developed by the DAMO-NLP-SG team for understanding video content and generating text about it. It performs well on visual question answering and video captioning, and can process complex video content to produce accurate, natural-language descriptions. It is optimized for spatial-temporal modeling and audio understanding, which supports intelligent analysis and processing of video content.

Target audience:

VideoLLaMA2-7B is aimed primarily at researchers and developers who need in-depth analysis and understanding of video content, in fields such as video recommendation systems, intelligent surveillance, and autonomous driving. It can help users extract valuable information from videos and make decisions more efficiently.

Usage Scenario Examples:

- Automatically generate engaging captions for videos uploaded by users on social media.

- Provide interactive question answering for instructional videos in education to enhance the learning experience.

- Use video Q&A in security monitoring to locate critical events quickly and improve response speed.

Features:

- Visual question answering: Understands video content and answers questions about it.

- Video caption generation: Automatically generates descriptive captions for videos.

- Spatial-temporal modeling: Improves the model’s understanding of object movement and event development in video content.

- Audio understanding: Improves the model’s ability to parse the audio information in a video.

- Multimodal interaction: Combines visual and language information to provide a richer interactive experience.

- Model inference: Supports efficient model inference on dedicated inference endpoints.

Steps for Use:

- Step 1: Visit the Hugging Face model page of VideoLLaMA2-7B.

- Step 2: Download or clone the model’s code base and set up the environment required for training and inference.

- Step 3: Load the pre-trained model and configure it according to the sample code provided.

- Step 4: Prepare the video data and perform any necessary pre-processing, such as frame extraction and resizing.

- Step 5: Use the model for video Q&A or caption generation, then collect and evaluate the results.

- Step 6: Adjust model parameters as needed to optimize performance.

- Step 7: Integrate the model into a practical application to automate video content analysis.
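The pre-processing mentioned in Step 4 can be illustrated with a minimal sketch in plain Python. It only computes which frames to keep and what size to resize them to; it does not decode video and is not taken from the VideoLLaMA2 code base, whose own sample code may handle this step for you. The helper names `sample_frame_indices` and `resize_keep_aspect`, and the example values of 8 frames and a 336-pixel short side, are assumptions chosen for illustration; check the model page for the values the model actually expects.

```python
from typing import List, Tuple


def sample_frame_indices(total_frames: int, num_frames: int) -> List[int]:
    """Pick num_frames indices spread evenly across a clip (hypothetical helper)."""
    if total_frames <= 0 or num_frames <= 0:
        raise ValueError("total_frames and num_frames must be positive")
    if num_frames >= total_frames:
        # Clip is shorter than the requested sample: keep every frame.
        return list(range(total_frames))
    # Split the clip into num_frames equal segments and take each segment's centre.
    segment = total_frames / num_frames
    return [int(segment * i + segment / 2) for i in range(num_frames)]


def resize_keep_aspect(width: int, height: int, short_side: int) -> Tuple[int, int]:
    """Scale (width, height) so the shorter side equals short_side (hypothetical helper)."""
    if min(width, height, short_side) <= 0:
        raise ValueError("width, height and short_side must be positive")
    scale = short_side / min(width, height)
    return max(1, round(width * scale)), max(1, round(height * scale))


if __name__ == "__main__":
    # Illustrative values only: a 300-frame 1920x1080 clip, 8 frames, 336 px short side.
    print(sample_frame_indices(300, 8))
    print(resize_keep_aspect(1920, 1080, 336))
```

The resulting indices and target size can then be passed to whichever video decoding library you use to read and resize the selected frames.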

Tool tags: Video understanding, language models