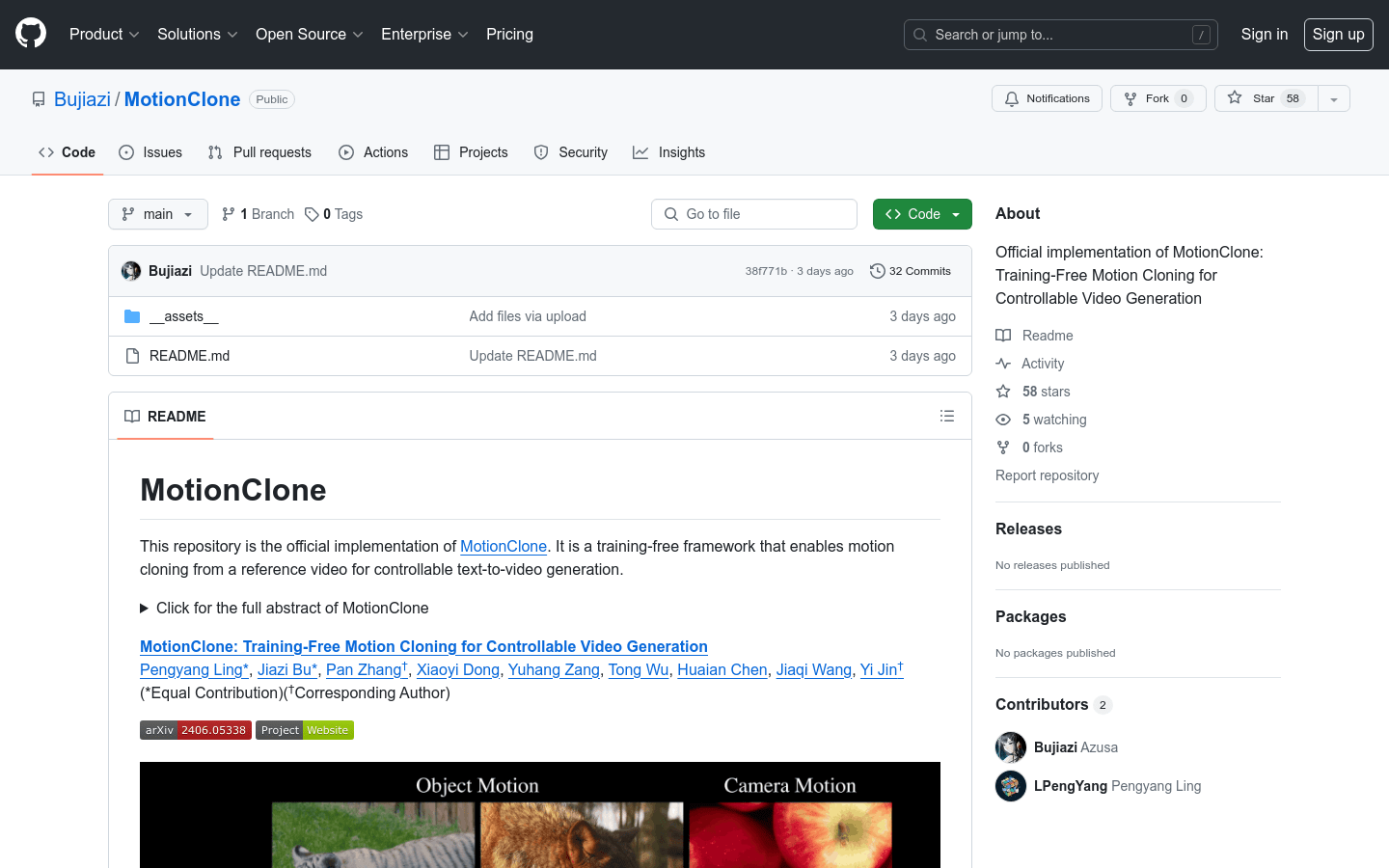

MotionClone

Training-free motion cloning for controllable video generation

Tags: AI video tools, AI video generation tools

Introduction:

MotionClone is a training-free framework that clones motion from a reference video to control text-to-video generation. It uses the temporal attention obtained during video inversion to represent the motion in the reference video, and introduces primary temporal-attention guidance to mitigate the influence of noisy or very subtle motion within the attention weights. To help the generation model synthesize reasonable spatial relationships and follow the prompt more closely, it also proposes a location-aware semantic guidance mechanism that draws on the coarse foreground location in the reference video and the original classifier-free guidance features.
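The idea behind primary temporal-attention guidance can be illustrated with a minimal NumPy sketch. It is not the MotionClone implementation: the function names, the toy tensor shape (spatial positions × frames × frames), and the choice of a squared-error energy are assumptions made for illustration. The sketch keeps only the largest attention weights per row of the reference attention map and compares the generated attention to the reference at those entries alone, so small or noisy weights do not contribute.

```python
import numpy as np


def topk_mask(attn_ref: np.ndarray, k: int = 1) -> np.ndarray:
    """Return a 0/1 mask marking the k largest weights along the last axis."""
    # argpartition moves the indices of the k largest entries into the last k slots.
    idx = np.argpartition(attn_ref, -k, axis=-1)[..., -k:]
    mask = np.zeros_like(attn_ref)
    np.put_along_axis(mask, idx, 1.0, axis=-1)
    return mask


def motion_guidance_energy(attn_ref: np.ndarray, attn_gen: np.ndarray, k: int = 1) -> float:
    """Squared error between reference and generated attention on the masked entries only."""
    mask = topk_mask(attn_ref, k)
    diff = mask * (attn_ref - attn_gen)
    return float(np.sum(diff ** 2))


def softmax(x: np.ndarray) -> np.ndarray:
    """Row-wise softmax over the last axis, used here only to build toy attention maps."""
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy data (assumption): 4 spatial positions, 16 frames attending to 16 frames.
    attn_ref = softmax(rng.normal(size=(4, 16, 16)))
    attn_gen = softmax(rng.normal(size=(4, 16, 16)))
    print(motion_guidance_energy(attn_ref, attn_gen))  # positive for mismatched maps
    print(motion_guidance_energy(attn_ref, attn_ref))  # 0.0 when the maps agree
```

In a real sampler, an energy of this kind would be differentiated with respect to the latent and used to steer each denoising step; the sketch only shows how the sparse mask limits which attention entries count.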

Target users:

MotionClone suits video producers, animators, and researchers because it generates video content without any model training. It is especially useful for professionals who need to generate videos from specific text prompts while reusing the motion of a reference video.

Usage Scenario Examples:

- Animators use MotionClone to quickly generate animated video sketches from scripts

- Video producers use MotionClone to generate preliminary versions of video content from scripts

- Researchers use MotionClone for research and development of video generation technology

Features:

- Clones motion from a reference video without training

- Uses temporal attention to represent the motion in a video

- Primary temporal-attention guidance mitigates the effects of noisy or subtle motion

- Location-aware semantic guidance helps generate reasonable spatial relationships

- Enhances the prompt-following ability of the video generation model

- Suitable for controllable text-to-video generation

Steps for Use:

- 1. Set up the code base and the conda environment

- 2. Download Stable Diffusion V1.5

- 3. Prepare a community model, for example by downloading the RealisticVision V5.1 .safetensors checkpoint

- 4. Prepare the AnimateDiff motion modules. The recommended downloads are v3_adapter_sd_v15.ckpt and v3_sd15_mm.ckpt

- 5. Perform DDIM inversion on the reference video

- 6. Perform motion cloning

- 7. Cite the MotionClone paper if you use it in your work
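Before running the inversion and cloning steps, it can help to confirm that the downloads from steps 2 to 4 are in place. The following is a minimal sketch of such a check. The directory layout (`models/StableDiffusion`, `models/DreamBooth_LoRA`, `models/Motion_Module`) and the helper name `missing_assets` are assumptions for illustration, not paths confirmed by this page, so adjust them to match the repository you cloned.

```python
from pathlib import Path

# Hypothetical layout (assumption): change these paths to match your checkout.
EXPECTED_ASSETS = {
    "Stable Diffusion V1.5": Path("models/StableDiffusion"),
    "Community model (e.g. RealisticVision V5.1)": Path("models/DreamBooth_LoRA"),
    "AnimateDiff adapter": Path("models/Motion_Module/v3_adapter_sd_v15.ckpt"),
    "AnimateDiff motion module": Path("models/Motion_Module/v3_sd15_mm.ckpt"),
}


def missing_assets(root, expected=EXPECTED_ASSETS):
    """Return the names of expected files or directories that do not exist under root."""
    root = Path(root)
    return [name for name, rel in expected.items() if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_assets(".")
    if missing:
        print("Missing before DDIM inversion can run:")
        for name in missing:
            print(f"  - {name}")
    else:
        print("All expected model files were found.")
```

Running the script from the repository root lists whichever downloads are still missing, which is quicker than discovering a missing checkpoint partway through inversion.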

Tool tags: video generation, motion cloning